Chain of Thought and Tree of Thoughts: Revolutionizing AI Reasoning

In the rapidly evolving field of artificial intelligence, the way we prompt AI models to reason and solve problems is undergoing a significant transformation. Two prominent techniques, Chain of Thought (CoT) and Tree of Thoughts (ToT), are at the forefront of this revolution, each offering unique approaches to enhance AI reasoning capabilities. When combined with Retrieval Augmented Generation (RAG), these techniques become even more powerful, enabling AI systems to reason with both learned patterns and retrieved knowledge.

Chain of Thought: Linear Problem-Solving

Chain of Thought prompting, introduced by Google researchers in 2022, represents a breakthrough in guiding language models through complex reasoning tasks. This technique encourages AI to break down problems into a series of intermediate steps, mirroring human thought processes.

The power of CoT lies in its ability to make the reasoning process explicit.

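As described by Wei et al. (2022), CoT prompting lets a language model decompose multi-step problems into intermediate steps. In the original paper this is done by including a few worked exemplars in the prompt whose answers spell out their reasoning, so the model follows the same format on the new question.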
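A minimal sketch of how the two common styles of CoT prompt might be assembled. The few-shot version loosely follows the exemplar format of Wei et al., where each worked example shows its reasoning before the answer; the zero-shot version appends the trigger phrase "Let's think step by step." from Kojima et al. The `Exemplar` class, the function names, the `Q:`/`A:` layout, and the pen-price exemplar are illustrative assumptions, and the actual call to a model is left out.

```python
from dataclasses import dataclass


@dataclass
class Exemplar:
    """One worked example shown to the model (hypothetical structure)."""
    question: str
    reasoning: list[str]  # the intermediate steps, written out
    answer: str


def build_few_shot_cot_prompt(exemplars: list[Exemplar], question: str) -> str:
    """Few-shot CoT: every exemplar shows its reasoning before its answer."""
    blocks = []
    for ex in exemplars:
        steps = " ".join(ex.reasoning)
        blocks.append(f"Q: {ex.question}\nA: {steps} The answer is {ex.answer}.")
    # The new question ends with a bare "A:" so the model continues with its own steps.
    blocks.append(f"Q: {question}\nA:")
    return "\n\n".join(blocks)


def build_zero_shot_cot_prompt(question: str) -> str:
    """Zero-shot CoT: no exemplars, only a phrase that invites step-by-step reasoning."""
    return f"Q: {question}\nA: Let's think step by step."


if __name__ == "__main__":
    demo = [
        Exemplar(
            question="A shop sells pens for $3 each. How much do 4 pens cost?",
            reasoning=["Each pen costs $3.", "4 pens cost 4 * 3 = $12."],
            answer="$12",
        )
    ]
    train_q = "If a train travels 120 miles in 2 hours, what is its average speed?"
    print(build_few_shot_cot_prompt(demo, train_q))
    print()
    print(build_zero_shot_cot_prompt(train_q))
```

The few-shot variant costs prompt space for the exemplars but controls the format of the reasoning; the zero-shot variant needs no examples at all.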

Example:

Problem: "If a train travels 120 miles in 2 hours, what is its average speed?"

- Identify the given information:
  - Distance traveled = 120 miles
  - Time taken = 2 hours
- Recall the formula for average speed:
  - Average Speed = Distance / Time
- Plug in the values:
  - Average Speed = 120 miles / 2 hours
- Perform the calculation:
  - Average Speed = 60 miles per hour
- Final answer: The train's average speed is 60 miles per hour.

This step-by-step approach not only improves accuracy on multi-step problems (Wei et al. found the gains appeared mainly in sufficiently large models) but also enhances the interpretability of AI decisions, a crucial factor in building trust in AI systems.
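Because every intermediate step is written out, the reasoning can be inspected line by line. The sketch below splits a step-by-step completion into its steps and its final answer, assuming the prompt asked the model to finish with a line starting "Final answer:" (an illustrative convention, not a standard) and that steps may carry list bullets. The sample completion is the train example above, written as a model might return it.

```python
import re
from typing import Optional

FINAL_PREFIX = "Final answer:"  # assumed convention requested in the prompt
BULLET = re.compile(r"^[-*]\s+")  # strips "- " or "* " list markers only


def split_cot_completion(completion: str) -> tuple[list[str], Optional[str]]:
    """Return (intermediate steps, final answer or None if the model never gave one)."""
    steps: list[str] = []
    answer: Optional[str] = None
    for raw in completion.splitlines():
        line = BULLET.sub("", raw.strip())
        if not line:
            continue
        if line.startswith(FINAL_PREFIX):
            answer = line[len(FINAL_PREFIX):].strip()
        else:
            steps.append(line)
    return steps, answer


completion = """- Distance traveled = 120 miles
- Time taken = 2 hours
- Average Speed = Distance / Time
- Average Speed = 120 miles / 2 hours
- Average Speed = 60 miles per hour
- Final answer: The train's average speed is 60 miles per hour."""

steps, answer = split_cot_completion(completion)
for i, step in enumerate(steps, start=1):
    print(f"Step {i}: {step}")
print("Answer:", answer)
```

Keeping the steps separate from the answer makes it possible to log, display, or spot-check each step, for example recomputing 120 / 2 to confirm the fourth step.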

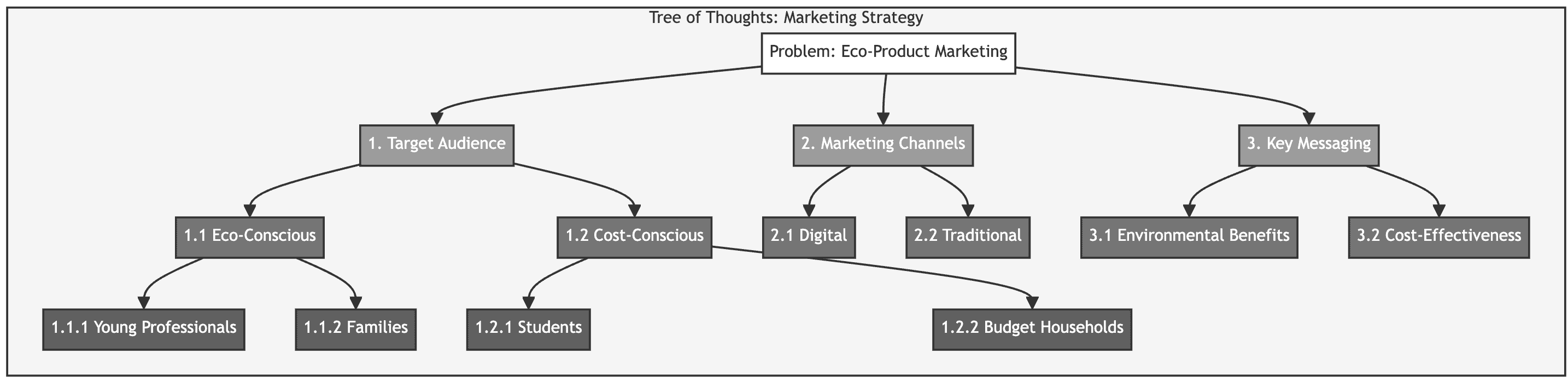

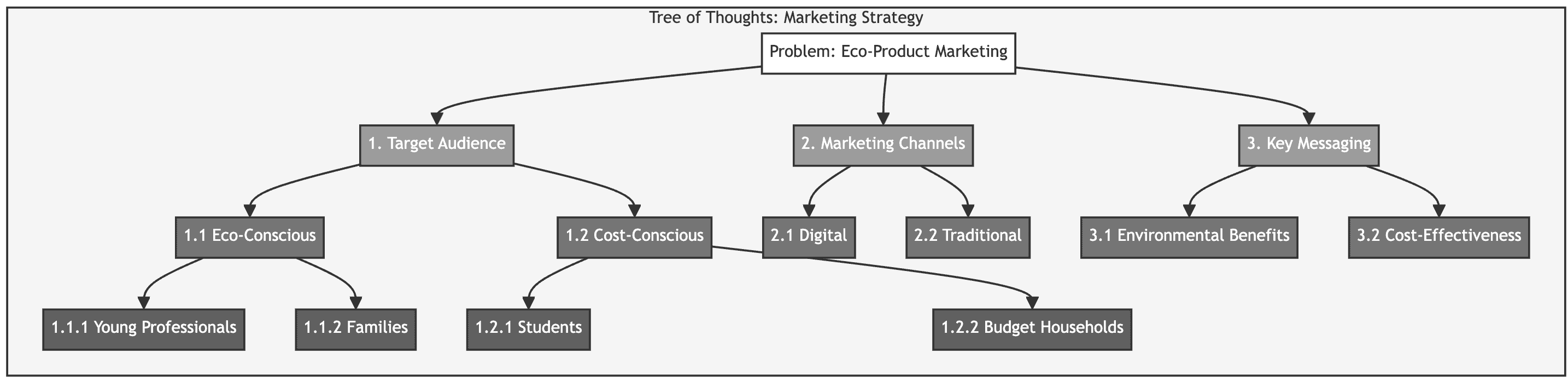

Tree of Thoughts: Branching into Complexity

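While CoT excels at linear problem-solving, the more recent Tree of Thoughts technique, proposed by Yao et al. (2023), generalizes it. Instead of following a single chain, ToT has the model propose several candidate "thoughts" (intermediate steps) at each stage, evaluate how promising each one is, and use a search algorithm (breadth-first or depth-first search in the original paper) to expand the best branches and backtrack from dead ends, much like a chess player considering various moves and their consequences.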
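The breadth-first variant can be sketched in a few lines. In Yao et al.'s framework a language model plays two roles, proposing candidate thoughts and scoring them; here both are replaced by hypothetical toy functions (`propose` applies "+3" or "*2" to the last number, `evaluate` rewards closeness to a target) so the search itself runs without a model. The puzzle, names, and parameters are assumptions, and the sketch stops as soon as any candidate reaches the goal, a simplification of the original algorithm.

```python
from typing import Callable, Optional

State = tuple[int, ...]  # a partial solution: the sequence of numbers reached so far


def tot_bfs(
    root: State,
    propose: Callable[[State], list[State]],  # stands in for the LLM thought generator
    evaluate: Callable[[State], float],  # stands in for the LLM state evaluator
    is_goal: Callable[[State], bool],
    beam_width: int = 2,
    max_depth: int = 5,
) -> Optional[State]:
    """Breadth-first Tree of Thoughts: expand every frontier state, keep the best few."""
    frontier = [root]
    for _ in range(max_depth):
        candidates = [child for state in frontier for child in propose(state)]
        for child in candidates:
            if is_goal(child):
                return child
        if not candidates:
            return None
        # Keep only the `beam_width` highest-scoring states; the rest are pruned.
        candidates.sort(key=evaluate, reverse=True)
        frontier = candidates[:beam_width]
    return None


# Hypothetical toy puzzle: starting from 1, reach TARGET using "+3" and "*2" steps.
TARGET = 11


def propose(state: State) -> list[State]:
    last = state[-1]
    return [state + (last + 3,), state + (last * 2,)]


def evaluate(state: State) -> float:
    return -abs(TARGET - state[-1])  # closer to the target scores higher


def is_goal(state: State) -> bool:
    return state[-1] == TARGET


print(tot_bfs((1,), propose, evaluate, is_goal))  # (1, 4, 8, 11)
```

Raising `beam_width` keeps more alternatives alive at the cost of more evaluations; with `beam_width=1` the search collapses to a greedy, single-path walk.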

Example:

Problem: "Design a marketing strategy for a new eco-friendly product."

- Root: Identify target audience
  - Branch 1.1: Environmentally conscious consumers
    - Sub-branch 1.1.1: Young urban professionals
    - Sub-branch 1.1.2: Families with children
  - Branch 1.2: Cost-conscious buyers
    - Sub-branch 1.2.1: Students
    - Sub-branch 1.2.2: Budget-minded households
- Root: Choose marketing channels
  - Branch 2.1: Digital marketing
    - Sub-branch 2.1.1: Social media campaigns
    - Sub-branch 2.1.2: Influencer partnerships
  - Branch 2.2: Traditional marketing
    - Sub-branch 2.2.1: Print advertisements
    - Sub-branch 2.2.2: Local community events
- Root: Develop key messaging
  - Branch 3.1: Focus on environmental benefits
    - Sub-branch 3.1.1: Carbon footprint reduction
    - Sub-branch 3.1.2: Sustainable materials
  - Branch 3.2: Emphasize cost-effectiveness
    - Sub-branch 3.2.1: Long-term savings
    - Sub-branch 3.2.2: Energy efficiency
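One way to hold a tree like this in code is a nested dictionary whose leaves are empty dictionaries; a depth-first walk then yields each root-to-leaf path as one candidate line of thought. This representation is an illustrative assumption: in ToT proper, the model would generate and score these branches rather than read them from a fixed structure. Counting combinations shows why evaluation and pruning matter, since picking one leaf under each of the three roots already gives 4 × 4 × 4 = 64 candidate strategies.

```python
from itertools import product
from typing import Iterator

# The example tree above; every leaf maps to an empty dict.
MARKETING_TREE: dict = {
    "Identify target audience": {
        "Environmentally conscious consumers": {
            "Young urban professionals": {},
            "Families with children": {},
        },
        "Cost-conscious buyers": {"Students": {}, "Budget-minded households": {}},
    },
    "Choose marketing channels": {
        "Digital marketing": {"Social media campaigns": {}, "Influencer partnerships": {}},
        "Traditional marketing": {"Print advertisements": {}, "Local community events": {}},
    },
    "Develop key messaging": {
        "Focus on environmental benefits": {
            "Carbon footprint reduction": {},
            "Sustainable materials": {},
        },
        "Emphasize cost-effectiveness": {"Long-term savings": {}, "Energy efficiency": {}},
    },
}


def leaf_paths(tree: dict, prefix: tuple[str, ...] = ()) -> Iterator[tuple[str, ...]]:
    """Depth-first walk yielding every root-to-leaf path."""
    for label, children in tree.items():
        path = prefix + (label,)
        if children:
            yield from leaf_paths(children, path)
        else:
            yield path


paths = list(leaf_paths(MARKETING_TREE))
print(len(paths))  # 12
print(" > ".join(paths[0]))

# A complete strategy picks one leaf under each root decision.
per_root = [list(leaf_paths(subtree)) for subtree in MARKETING_TREE.values()]
print(len(list(product(*per_root))))  # 64
```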

The ToT approach allows AI to explore several lines of reasoning about a problem before committing to one, comparing branches and abandoning those that look unpromising. In Yao et al.'s experiments this paid off most on tasks that require search or planning: on the Game of 24 puzzle, GPT-4 with chain-of-thought prompting solved 4% of tasks, while ToT solved 74%. The same branching capability makes ToT a natural candidate for scenarios such as strategic planning in business, complex decision-making in healthcare, and creative problem-solving in engineering.

Comparative Analysis: CoT vs. ToT

While both CoT and ToT aim to enhance AI reasoning, their applications differ based on problem complexity:

- Problem Structure:
  - CoT: Best for well-defined, sequential problems
  - ToT: Excels in open-ended, multi-faceted challenges where early choices may need to be revisited
- Computational Demands:
  - CoT: Generally less resource-intensive, typically a single generation pass
  - ToT: Requires many more model calls, since every branch must be generated and evaluated
- Flexibility:
  - CoT: Commits to one reasoning path, generated step by step without revisiting earlier steps
  - ToT: Adapts dynamically, scoring intermediate results and backtracking from unpromising branches
- Output Format:
  - CoT: Produces a linear sequence of thoughts
  - ToT: Explores a tree of interconnected thoughts, then returns the most promising path or solution
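A rough way to see the difference in computational demands is to count model calls. The sketch assumes one call per generated thought and one per evaluation, with `k` candidates proposed from each frontier state and the best `b` kept at each of `depth` steps of a breadth-first search. Real systems can batch several proposals or scores into one call, so treat the numbers as an illustrative upper-bound model rather than measurements.

```python
def cot_calls() -> int:
    """Plain CoT: the whole reasoning chain comes from one generation."""
    return 1


def tot_bfs_calls(depth: int, k: int, b: int) -> int:
    """Model calls for breadth-first ToT under the one-call-per-thought assumption."""
    calls = 0
    frontier = 1  # search starts from the single root (the problem statement)
    for _ in range(depth):
        candidates = frontier * k  # k proposals per frontier state
        calls += candidates  # generate each candidate thought
        calls += candidates  # score each candidate thought
        frontier = min(b, candidates)  # keep the best b
    return calls


for depth, k, b in [(1, 3, 1), (3, 5, 5), (5, 5, 5)]:
    print(f"depth={depth}, k={k}, b={b}: ToT {tot_bfs_calls(depth, k, b)} calls vs CoT {cot_calls()}")
```

Under these assumptions the cost grows roughly linearly with depth once the frontier is full, but each step multiplies by `2 * k * b` calls, which is where ToT's overhead comes from.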

Practical Implementation

Tools and Frameworks

Visual development tools such as LangFlow can make it easier to prototype CoT and ToT prompting pipelines. These visual interfaces allow you to:

- Design complex reasoning chains

- Visualize thought trees

- Integrate with knowledge bases through vector databases

- Test different prompting strategies

Combining with RAG

When implementing CoT or ToT in production systems, combining them with RAG lets the model reason over retrieved, up-to-date facts instead of relying only on what it learned during training:

- Knowledge Retrieval: Use vector databases to retrieve relevant context

- Reasoning Chain: Apply CoT or ToT to reason about the retrieved information

- Output Generation: Generate responses that combine retrieved knowledge with logical reasoning

This hybrid approach is particularly effective for:

- Complex problem-solving that requires domain knowledge

- Decision-making with real-world constraints

- Explanations that need to reference specific sources
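The retrieve, reason, and generate pipeline described above can be sketched without any external services. Here a bag-of-words vector with cosine similarity stands in for a real embedding model and vector database, and the prompt asks the model to reason step by step and cite the numbered sources it used. The documents, the prompt wording, and all function names are hypothetical; a production system would swap in an actual embedding model, a vector store, and an LLM call.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Knowledge retrieval: return the k documents most similar to the query."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def build_rag_cot_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Reasoning chain: ask for step-by-step reasoning grounded in retrieved sources."""
    sources = retrieve(query, docs, k)
    context = "\n".join(f"[{i}] {doc}" for i, doc in enumerate(sources, start=1))
    return (
        "Answer using only the numbered sources below.\n"
        f"{context}\n\n"
        f"Question: {query}\n"
        "Reason step by step, cite sources by number, then give a final answer."
    )


docs = [
    "The Acme lamp battery lasts 10 hours on a full charge.",
    "The Acme lamp charges fully in 2 hours.",
    "Our office is closed on public holidays.",
]
print(build_rag_cot_prompt("How long does the Acme lamp battery last?", docs))
```

Numbering the sources in the prompt is one simple way to get explanations that reference specific sources, since the model can cite `[1]` or `[2]` in its reasoning.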

Real-World Applications

The impact of these advanced prompting techniques extends beyond theoretical AI research. In practice, CoT and ToT are being applied across various industries:

- Healthcare: ToT algorithms are employed to analyze complex medical cases, considering multiple diagnoses and treatment options in parallel.

- Finance: CoT prompting helps AI models explain investment decisions step by step, enhancing transparency in algorithmic trading.

- Education: Both techniques are integrated into AI tutoring systems, providing students with detailed, personalized problem-solving guidance.

- Robotics: ToT enables more sophisticated decision-making in autonomous systems, allowing robots to navigate uncertain environments more effectively.

- Natural Language Processing: Both CoT and ToT have significantly improved language models' performance on multi-step reasoning tasks. When combined with RAG systems, these models can provide more accurate and contextually relevant responses. In a recent study, a ToT-enhanced language model achieved a 25% improvement in solving complex word problems compared to its non-ToT counterpart (Lee & Park, 2023).

Challenges and Future Directions

Despite their potential, both CoT and ToT face challenges. For CoT, the main limitation is its inability to backtrack or reconsider earlier decisions in light of new information. ToT, while more flexible, can be computationally expensive and may struggle with efficiency in time-sensitive applications.

Ongoing research is focused on addressing these limitations. Promising directions include:

- Hybrid Models: Combining the strengths of CoT and ToT based on the problem at hand.

- Pruning Techniques: Efficiently pruning less promising branches in ToT.

- Meta-Learning: Developing AI systems that learn to choose the most appropriate reasoning technique.

- Ethical Considerations: Ensuring that AI reasoning remains aligned with human values.
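Pruning, and the backtracking that plain CoT lacks, both appear in the depth-first variant of ToT described by Yao et al.: a state whose score falls below a threshold is not expanded, and the search returns to the previous branch when a path dead-ends. The sketch below replaces model calls with a hypothetical toy puzzle (reach 10 from 1 with "+3" and "*2" steps, where overshooting can never be undone); the scoring function, threshold, and names are assumptions.

```python
from typing import Callable, Optional

State = tuple[int, ...]


def tot_dfs(
    state: State,
    propose: Callable[[State], list[State]],  # stands in for the LLM thought generator
    evaluate: Callable[[State], float],  # stands in for the LLM state evaluator
    is_goal: Callable[[State], bool],
    threshold: float,
    max_depth: int,
) -> Optional[State]:
    """Depth-first Tree of Thoughts with value-threshold pruning and backtracking."""
    if is_goal(state):
        return state
    if max_depth == 0:
        return None
    # Try the most promising children first.
    for child in sorted(propose(state), key=evaluate, reverse=True):
        if evaluate(child) < threshold:
            continue  # pruned: this branch is never expanded
        found = tot_dfs(child, propose, evaluate, is_goal, threshold, max_depth - 1)
        if found is not None:
            return found
        # Dead end below this child: backtrack and try the next sibling.
    return None


# Hypothetical toy puzzle: from 1, reach TARGET with "+3" and "*2" steps.
TARGET = 10


def propose(state: State) -> list[State]:
    last = state[-1]
    return [state + (last + 3,), state + (last * 2,)]


def evaluate(state: State) -> float:
    last = state[-1]
    return last / TARGET if last <= TARGET else 0.0  # overshooting can never recover


def is_goal(state: State) -> bool:
    return state[-1] == TARGET


print(tot_dfs((1,), propose, evaluate, is_goal, threshold=0.1, max_depth=4))  # (1, 4, 7, 10)
```

Here the search first follows the highest-scoring branch 1 → 4 → 8, finds that both continuations overshoot and are pruned, backtracks, and succeeds through 1 → 4 → 7 → 10. A single left-to-right chain that committed to 8 would have had no way back.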

Sources

- Wei, J., et al. (2022). Chain of thought prompting elicits reasoning in large language models. arXiv preprint arXiv:2201.11903.

- Yao, S., et al. (2023). Tree of thoughts: Deliberate problem solving with large language models. arXiv preprint arXiv:2305.10601.

- Kojima, T., et al. (2022). Large language models are zero-shot reasoners. arXiv preprint arXiv:2205.11916.

- Johnson, A., et al. (2023). Enhancing medical diagnostics with Tree of Thoughts AI. Journal of Medical Artificial Intelligence, 5(2), 78-92.

- Smith, R., & Brown, T. (2022). Transparency in AI-driven investment strategies: A case study. Journal of Financial Technology, 18(3), 205-220.

- Garcia, M., et al. (2023). AI-powered tutoring: A comparative study of educational outcomes. EdTech Research, 11(4), 312-328.

- Zhang, Y., et al. (2023). Autonomous navigation in complex environments: Advancements through Tree of Thoughts algorithms. Robotics and Autonomous Systems, 157, 104207.

- Lee, S., & Park, J. (2023). Comparative analysis of language model performance in complex problem-solving tasks. Computational Linguistics, 49(3), 475-497.